1 Introduction to RTOS

1.1 Understanding Realtime Operating System (RTOS)

To offer an analogy, Realtime systems work like just like a driver driving his racing car at a very high speed.

The driver will have to take the right decision fast and in time. A decision to turn left may not be a good idea after the turn has been missed.

The driver has to know his environment very well. The car would skid if the road were wet or icy.

The driver should know the capability of the car. If the driver tries to push it beyond its limits, the car will break down. And break down at high speed can lead to fatal accidents.

The driver should know what all information would the various meters in the dashboard provide him. The speed of the car, how much fuel is still left, the temperature of the engine, the air pressure in the tires, etc are vital to for a driver of such a fast car so that a valid decision can be made.

What happens if the driver turns on a wet road at very high speed? The car may have an accident. The driver of a racing car will always be prepared for such a possibility in terms of safeguards like helmets, escapes like a door that will open on one click and a recovery like an ambulance nearly.

As we study more about Realtime systems, we will notice the similarities between such a system and the driver.

Now consider you are cycling down a road that is in a very bad shape.

A quick veer to either side may be needed, just in time to avoid a pothole you had almost missed to notice. But, if you fail you will get a total-body jerk that might shake up a bit but you will not need an ambulance to recover.

Or consider your average day in front of your home PC.

You are moving the mouse and the pointer moves accordingly. Now, what happens if due to overload, the mouse is slow to respond? We will be irritated, but the world will not come crashing down. High efficient with respect to the home PC responding to mouse’s movement is desired but it is not critical.

That is the essence of the difference between a Realtime system and other systems. Fatality, as compared to irritation.

2 Some Basic Concepts and Definitions

2.1 POSIX

POSIX is an acronym that stands for Portable Operating System Interface.

The POSIX standard is heavily influenced by UNIX operating system. As the name suggests, the aim of this standard is to provide a common interface for operating systems for purpose of portability to source code level.

The following quote appears in the Introduction to POSIX.1: “The name POSIX was suggested by Richard Stallman. It is expected to be pronounced pahz-icks as in positive, not poh-six, or other variations. The pronunciation has been published in an attempt to promulgate a standardized way of referring to a standard operating system interface”.

For the sake of uniformity, we shall use POSIX interfaces in examples.

POSIX includes interfaces for:

- asynchronous I/O

- semaphores

- message queues

- memory management

- queued signals

- scheduling

- clocks and timers

For more on POSIX, please refer to http://www.pasc.org/.

Note: The meaning of terms process, thread, task or job varies from one environment to another. Therefore, it is necessary to properly define these terms as used here.

2.2 Process

A process typically refers to a “program in execution” and the set of resources associated with it. As per POSIX definition, a process is an address space with one or more threads executing within that address space, and the required system resources for those threads. Many of the system resources are shared among all of the threads within a process.

2.3 Thread

A thread typically refers to a unit flow of control that can be scheduled. A process contains at least one thread. As per POSIX definition, a thread is a single flow of control within a process. Each thread has its own thread ID, scheduling priority, Errno value, etc. All threads executing in the same process share the same address space (and hence, mess each other up).

2.4 Task

Task is not a standard term. Sometimes it refers to a process and sometimes to a thread. In Realtime systems, it often refers to a thread and that is how it is used here.

2.5 Job

Job is again not a standard term. It is often used in place of term task. We will not use this term.

2.6 Multi-Tasking

Multitasking refers to the ability of a system to execute more than one task at the same time. However, in reality, multitasking just creates the appearance of many tasks running concurrently. Actually, the tasks are interleaved rather than concurrently.

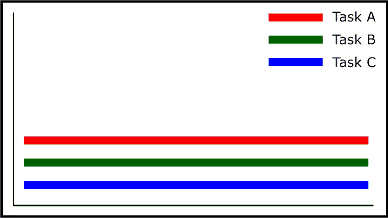

Fig 1: Task A, Task B and Task C running concurrently

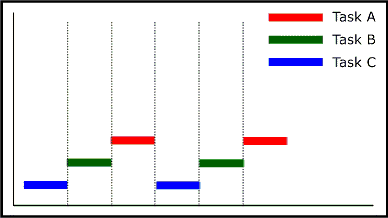

Fig 2: Task A, Task B and Task C running interleaved

2.6.1 Cooperative Multitasking

In cooperative multitasking, each task can control the CPU for as long as it needs it. Thus, the task currently controlling the CPU must offer control to other tasks. It is called cooperative because all tasks must cooperate for it to work. If one task acts like a selfish and self-centric person and does not cooperate, it can hog the CPU.

2.6.2 Preemptive Multitasking

In preemptive multitasking, the operating system allocates the CPU time slices to each task. Thus, preemptive multitasking forces tasks to share the CPU whether they want to or not.

2.7 Finite State machine

In computer science, a finite-state machine (FSM) or finite-state automaton (FSA) is an abstract concept.

A FSM can have only fixed number of conditions or states and it can go from one state to another by a fixed number of paths. All this is properly defined during the design of the FSM.

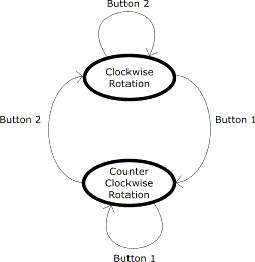

Imagine a small toy windmill whose arms are always rotating. The windmill arms can rotate in two directions, clockwise and counter-clockwise. It has a small remote that has two buttons. If one is clicked, the arms rotate in clockwise direction and if the other is clicked, they rotate in a counter-clockwise direction.

This whole system is a simple FSM. The windmill has two states and there are two transitions to move between the states. One thing to note is that a state does not have any further internal structure. That means that when an FSM is in a state, it has that state and no state has any number of sub-states it can have while in a particular state.

Fig 3: Stated and State Transitions of the Windmill

2.8 State

Operating systems keep track of the various tasks running with help of numerous parameters. One of them is the internal state of a task. This is a very common model and many operating systems use states to keep track of the internal condition of a task.

Thus, the state of a task is the last known or current status of the task.

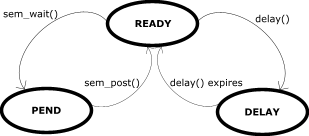

The states and their transition are different for different operating systems. However, typical states of a task that we will use here can be:

READY: The state of a task that is not waiting for any resource other than the CPU.

PEND: The state of a task that is blocked due to the unavailability of some resource (other than the CPU).

DELAY: The state of a task that has been put to sleep for some duration.

2.9 State Transitions

As we saw in the windmill example, there are always well-defined ways to change the state of a system.

The following table lists some reasons or ways we can change the state of a system:

READY --> wait for a resource --> PEND READY --> delay command --> DELAY PEND --> resource acquired --> READY DELAY --> delay expires --> READY

Fig 4: State Diagram

2.10 Preemption

Preemption is defined as the act of a higher-priority process taking control of the processor from a lower-priority task.

2.11 Context Switch

As we saw earlier that in a Multitasking system, various tasks can be interleaved and that the task switch can be preemptive. When one task preempts another task out of CPU to use the CPU for itself, the CPU is said to have undergone a context change.

Thus, context switch refers to the changes necessary in the CPU in response to preemption when the scheduler schedules a different task to run on the CPU. This involves two things:

- switching out the outgoing task, and

- switching in the incoming task

For the outgoing task, it may do typical things like saving the contents of the registers, saving the contents of the stack, etc. For an incoming task, it may load the saved values to the registers, stack, etc.

2.12 Context Switch Overhead

It takes a finite amount of time for the system to switch from one running task to a different task. This is referred to as context switching overhead.

2.13 Synchronization

Tasks typically share resources and services for which they must be prepared to wait if they are not available immediately. Synchronization is the managing the resources and tasks such that there is a fair allocation of the resource to a task.

3 Scheduling

As we saw earlier, in a multi-tasking system various tasks can be interleaved. That is, one task can run for some time and then CPU is allocated to another task and so on. We also saw that the task switch could be preemptive or cooperative.

The process of determining which task runs when in a multi-tasking system is referred to as CPU scheduling or plain scheduling. The algorithm followed to decide who gets next turn on CPU is called the scheduling algorithm. The program that does this is called the scheduler.

Thus, when we have a pool of tasks in READY state, contending for the CPU time, the job of the scheduler is to distribute the resource of the CPU to the different processes in a fair manner. The definition of fair depends on the designer of the system and varies. Based on this need of fairness, various scheduling algorithms are chosen.

There are two scheduling actions per task instance. One, when the task is context switched and it begins to execute. Another, when it completes.

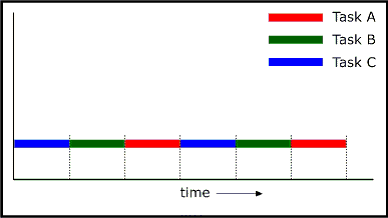

3.1 Round-Robin Scheduling

A round-robin scheduling algorithm attempts to share the CPU fairly among all Ready tasks. Round-robin scheduling achieves this by using time slicing. Each task is given CPU time for a defined interval or time slice. All tasks get an equal interval and all are executed in a rotation.

Fig 5: Round-Robin Scheduling

The disadvantage of this scheduling is that if a task of very high priority needs CPU time, it will have to wait for its turn. Since, in a Realtime system, a high priority task should be processed first, this scheduling as such does not serve the purpose.

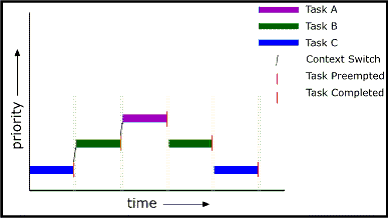

3.2 Priority Scheduling

A priority-based scheduling algorithm allocates a priority to each task. The priority level is usually in terms of a number. If there are 256 levels of priority, zero could be the highest priority level a task can have and 256 the lowest. The CPU is allocated to the highest priority task that is in READY state. It can also be called as Fixed-Priority Scheduling as the scheduler does not change the priority of a task.

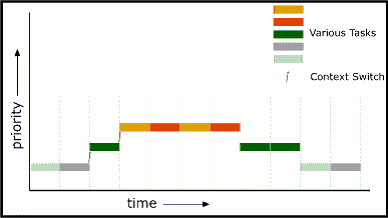

Fig 6: Priority Scheduling

Only in Fig 6, the context switch overhead has been shown explicitly between the end of a task’s run and the start of another when context switch happens for the sake of illustration.

The disadvantage of this scheduling is that if two tasks having the same priority need CPU time, the one starting earlier will starve the other task of processor time till it is done. Thus even if the second task is a high priority task, the system may not be able to complete it in time because of another equal priority task. This, this scheduling as such does not serve the purpose.

3.3 Fixed-Priority Preemptive Round-Robin Scheduling

One way of making use of the advantage of both scheduling methods without letting the disadvantages spoil Realtime-ness, is to use a mix of the two.

A Priority based Preemptive Round-Robin scheduling algorithm allocates a priority to each task. The CPU is allocated to the highest priority task that is in READY state. However, if there are more than one tasks at that priority level, they are run in round-robin manner.

Fig 7: Fixed-Priority Preemptive Round-Robin Scheduling

Most Realtime Operating Systems (RTOS) support this scheme. Now on, we will refer to it as Fixed-Priority Preemptive Scheduling only.

3.4 Rate-Monotonic Scheduling

A fixed-priority preemptive scheduling in which, the priority is decided by the scheduler based on its frequency (inverse of the period) if it is a periodic task. Thus, higher the frequency, the higher its priority is.

3.5 Dynamic-Priority Preemptive Scheduling

It is similar to Fixed-Priority Preemptive Scheduling with one basic difference. The difference is that the priority of a task can change from instance to instance or within the execution of an instance.

3.6 Deadline-Monotonic Scheduling

In Deadline-Monotonic Scheduling, the deadline of a task is a pre-fixed point in time relative to the beginning of the task. The shorter this deadline, the higher the priority so it can finish in time.

4 Inter-Task Communication

When we have multi-tasking, there is a need for tasks to communicate with each other. That maybe for the purpose of data sharing, synchronization, error handling or even exception handling.

4.1 Mutual Exclusion

Mutual Exclusion ensures that there is no contention for resource access. It ensures two tasks can not access the same resource at the same time and hence lead to unpredictability. It also ensures that there is a proper method to request and hold on and release of a resource that.

4.2 Inter-Task Communication Methods

- Shared memory

- Semaphores

- Signals

Shared Memory is the most simple method for tasks to communicate. Since all tasks share the address space, all tasks can access a given memory address.

A data structure can be used to define a memory block. By accessing this shared data structures the data can be shared between various tasks.

The flip side of this method is that it can lead to horrible errors if proper care is not taken. Let us consider one such issue.

RAW (Read After Write) error can happen when one task is writing to a memory and another task is reading from it. The ‘Read’ from the task reading should follow the ‘Write’ from the task writing. But if the task reading from that memory read it before the task writing onto it wrote any data on it. The task would end up reading invalid data. This, in a Realtime system, can lead to catastrophic failures.

Such errors can be avoided using various synchronization techniques like resource locking using semaphores, temporarily disabling interrupts or temporarily disabling preemption.

Semaphores often provide the fastest intertask communication mechanism and address the need for both mutual exclusion and synchronization.

A semaphore can be viewed as a flag or a marker or a red/green jhandi that can be used to specify if a resource is available or unavailable.

When a task tries to take a binary semaphore, the outcome depends on whether the semaphore is available or unavailable at the time of the call.

If the semaphore is available, then the semaphore becomes unavailable. It is up to this task to release the semaphore once its need is over.

If the semaphore is unavailable, the task is put on a queue of blocked tasks and enters a state of PEND on the availability of the semaphore.

4.2.2.1 Mutual Exclusion Using Binary Semaphores

Suppose at one given time, only one task is allowed to write into a memory location.

/* sample program 1 - incomplete */

int someGlobalVar;

void sometaskA(void)

{

...

/* wait for the semaphore */

sem_wait(aSemaphore);

/* got it! */

someGlobalVar = 1;

/* let go */

sem_post(aSemaphore);

...

}

void sometaskB(void)

{

...

/* wait for the semaphore */

sem_wait(aSemaphore);

/* got it! */

someGlobalVar = 2;

/* let go */

sem_post(aSemaphore);

...

}

This way when these two tasks are running in a multi-tasking environment, they will have to wait for a semaphore to be available to write into some global memory.

4.2.2.2 Synchronization Using Binary Semaphores

Suppose there is a task that produces one unit of data randomly. It can write that data into a global memory. There is another task that has been written to process the data. A semaphore can be used to synchronize these two tasks.

/* sample program 2 - incomplete */

int someGlobalVar;

void generatorTask(void)

{

while (1)

{

/* wait for the semaphore */

sem_wait(aSemaphore);

/* wait for some random time */

...

/* got it! */

someGlobalVar = 3;

/* let go */

sem_post(aSemaphore);

}

}

void processorTask(void)

{

while (1)

{

/* wait for the semaphore */

sem_wait(aSemaphore);

/* got it! that means generatorTask wrote something to someGlobalVar */

/* do something */

...

/* let go */

sem_post(aSemaphore);

}

}

The processorTask is forever waiting on the semaphore. Whenever it is available, it means that there is valid data available to process. Once it has done that, it lets go of the semaphore. In the meantime, generatorTask is already in queue to grab it. As soon as processorTask let’s go, generatorTask grabs it back and hold onto it till it has some valid data to send again.

This way when these two tasks are running in a multi-tasking environment, they can be synchronized.

Signals can be seen as software interrupts. They can asynchronously alter the control flow of a task. Any task or ISR can raise a signal for a particular task. The task that is being signaled, on receiving it immediately suspends its current thread of execution and executes the task-specified signal handler routine the next time it is scheduled to run.

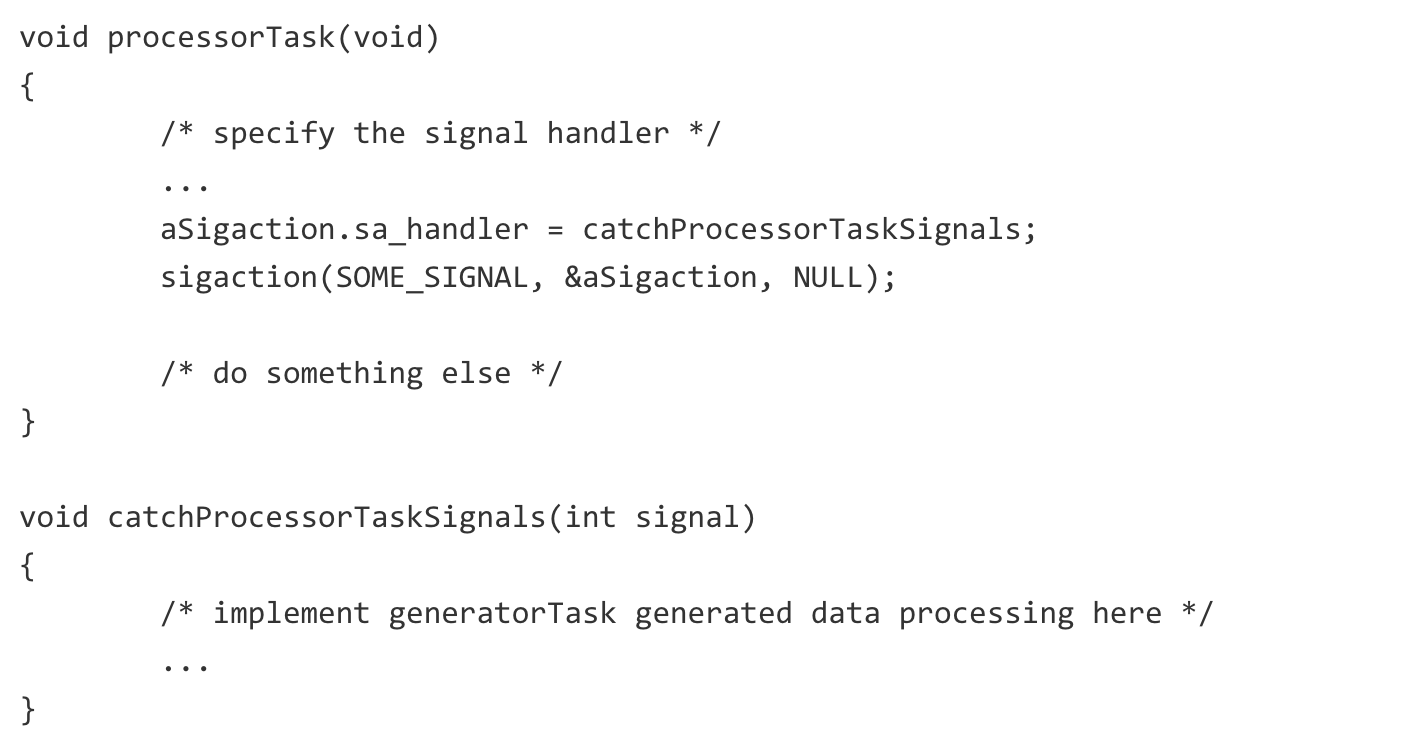

4.2.3.1 Synchronization Using Signals

Suppose there is a task that produces one unit of data randomly. It can write that data into a global memory. There is another task that has been written to process the data. A signal can be used to synchronize these two tasks.

/* sample program 3 - incomplete */

int someGlobalVar;

void generatorTask(void)

{

/* use OS specific method to get ID of the processor task */

processorID = someOSCall();

while (1)

{

/* wait for some random time */

...

/* generate data */

someGlobalVar = 3;

/* send a signal */

kill(processorID, signalNum);

}

}

void processorTask(void)

{

/* specify the signal handler */

...

aSigaction.sa_handler = catchProcessorTaskSignals;

sigaction(SOME_SIGNAL, &aSigaction, NULL);

/* do something else */

}

void catchProcessorTaskSignals(int signal)

{

/* implement generatorTask generated data processing here */

...

}

The generatorTask raises a signal as soon as the data is ready. When the processorTask was launched, it had specified a handler function for that signal. As soon as the generatorTask sends the signal, the processorTask will execute code in catchProcessorTaskSignals() inside the context of processorTask only.

This way when these two tasks are running in a multi-tasking environment, they can be synchronized.

4.3 Priority Inversion

Priority inversion arises when a higher-priority task is forced to wait for an indefinite period for a lower-priority task to complete and give up the resource.

Let us consider two tasks, taskHighPri and taskLowPri that have high and low priority, respectively. taskLowPri acquires some resource by taking its associated binary semaphore. Along comes taskHighPri and preempts taskLowPri. Now it wants to use the same resource and waits on the same semaphore that taskLowPri has taken. Since taskLowPri has been preempted, it is unable to finish what it was doing and give the semaphore. Thus, taskHighPri becomes blocked for an indefinite period.

There are ways to handle such scenarios. One way is that a task that has a resource, executes at the same priority as of the highest-priority task blocked on that resource.

So in above case, the taskLowPri’s priority is raised to that of taskHighPri. That way, having the same priority as taskHighPri, the taskLowPri preempts it (round robin followed when many tasks of same priority in READY state), finishes its work and gives up the semaphore. As soon, as it works gets done, its priority is back to normal and the situation is taken care of.